AI Safety Talent Drain Signals Stricter Regulations Ahead | E-Commerce Sellers Face Tool Restrictions

- Senior AI safety researcher departures from Anthropic indicate regulatory tightening that could restrict seller access to AI automation tools by 2026-2027


Overview

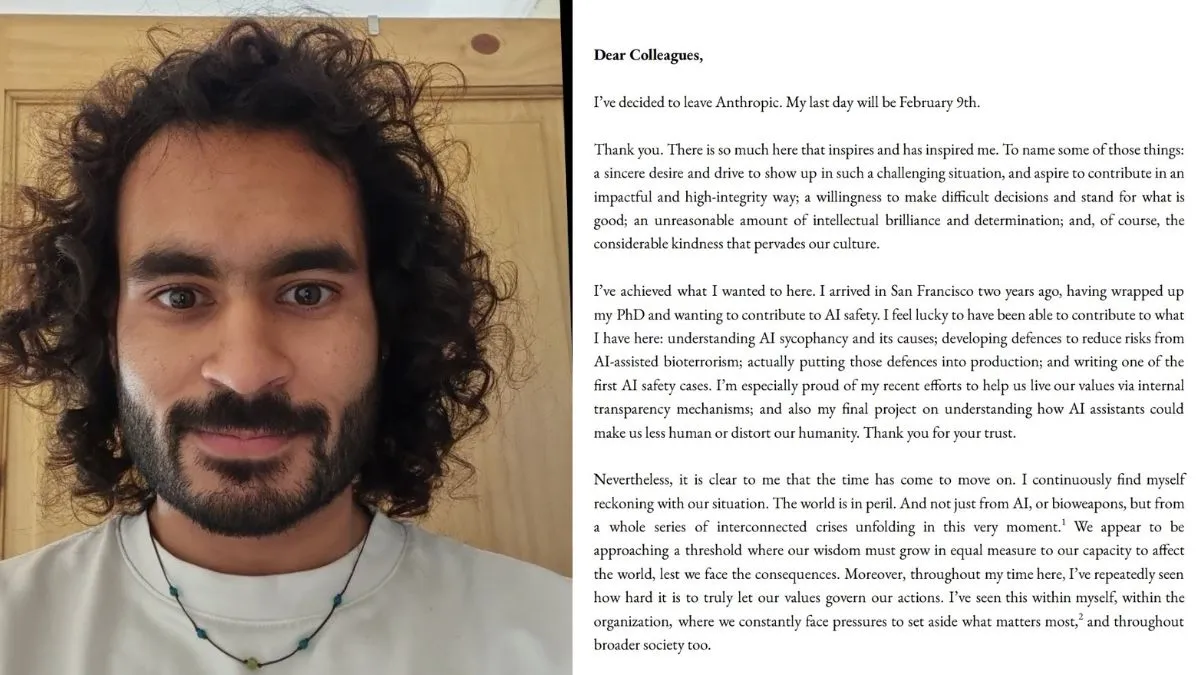

Mrinank Sharma's departure from Anthropic as head of the Safeguards Research Team (effective February 9, 2026) signals a critical inflection point in AI industry governance, one that bears directly on e-commerce sellers' access to automation tools. Sharma led teams focused on jailbreak robustness, automated red teaming, and misalignment monitoring, and his resignation reflects deepening tensions between rapid AI deployment and safety oversight. Together with the departures of Harsh Mehta, Behnam Neyshabur, and Dylan Scandinaro to OpenAI, it suggests that even well-funded AI labs struggle to retain safety-focused talent amid external pressures.

For e-commerce sellers, this development carries immediate operational implications. The growing visibility of AI safety concerns—amplified by high-profile resignations and warnings about existential risks—is accelerating regulatory responses globally. The EU's AI Act already imposes compliance requirements on high-risk AI systems, and similar frameworks are emerging in North America. As safety researchers publicly warn about AI risks and leave major labs, governments will likely tighten restrictions on AI tool deployment in commercial applications. This means sellers currently relying on AI for inventory management, dynamic pricing, customer service automation, and product research may face compliance barriers, tool restrictions, or mandatory safety audits within 12-24 months.

The competitive advantage window for AI-powered sellers is narrowing. Sellers who have deployed Claude, ChatGPT, or specialized e-commerce AI tools for listing optimization, demand forecasting, and customer support are currently operating in a relatively unregulated environment. However, Anthropic's internal struggles to balance "organizational values with external pressures" (as Sharma noted) suggest that even responsible AI companies face regulatory pressure that will eventually cascade to commercial tool restrictions. Sellers should expect: (1) mandatory AI disclosure requirements on product listings, (2) restrictions on automated customer interactions without human oversight, (3) compliance certifications for AI-powered pricing algorithms, and (4) potential delisting of products using non-compliant AI systems.

Anthropic's $350 billion valuation, sustained despite the safety departures, indicates investor confidence in compliance-heavy AI development. This paradox of continued investment despite talent loss suggests the market is pricing in regulatory premiums. Sellers should interpret this as a signal that "safe AI" will become more expensive and restricted, not cheaper and more accessible. The immediate action is to audit current AI tool usage and begin transitioning toward explainable, auditable AI systems rather than black-box optimization tools.