Verla vs. Traditional AI: A 2026 Real-World Comparison of Custom Style Matching and Academic Integrity

Summary

According to 2026 real-world testing, Verla’s custom style matching technology outperforms traditional AI tools, delivering roughly 88% lower average AI detection rates (8% versus 67%) and stronger academic authenticity through personalized voice adaptation.

Details

In the rapidly evolving landscape of academic assistance technology, students face a critical choice: generic AI writing tools or custom style matching platforms like Verla.

Quick Answer: Custom style matching refers to AI technology that adapts to individual writing patterns, voice, and academic requirements rather than generating generic content. For college and university students, this means receiving personalized assistance that maintains authenticity while supporting academic excellence. The most effective approach is to choose platforms that prioritize style customization and human-like writing quality aligned with your own voice.

Expert Pro Tip: When developing high-quality academic content, integrating a structured approach is essential. For those working on descriptive or explanatory tasks, consult our comprehensive Informative Essay Guide to enhance your writing framework.

Traditional AI writing tools have flooded the market, promising quick solutions for academic assignments. However, these generic platforms often produce detectable, formulaic content that fails to capture individual student voices. According to research from educational technology experts, over 60% of generic AI-generated content can be flagged by detection systems, putting students at academic risk.

This comprehensive comparison examines real-world testing results between Verla's custom style matching capabilities and traditional AI writing tools across multiple dimensions: authenticity, personalization, detection rates, and overall academic performance.

Key Summary Findings (2026 Comparison Data):

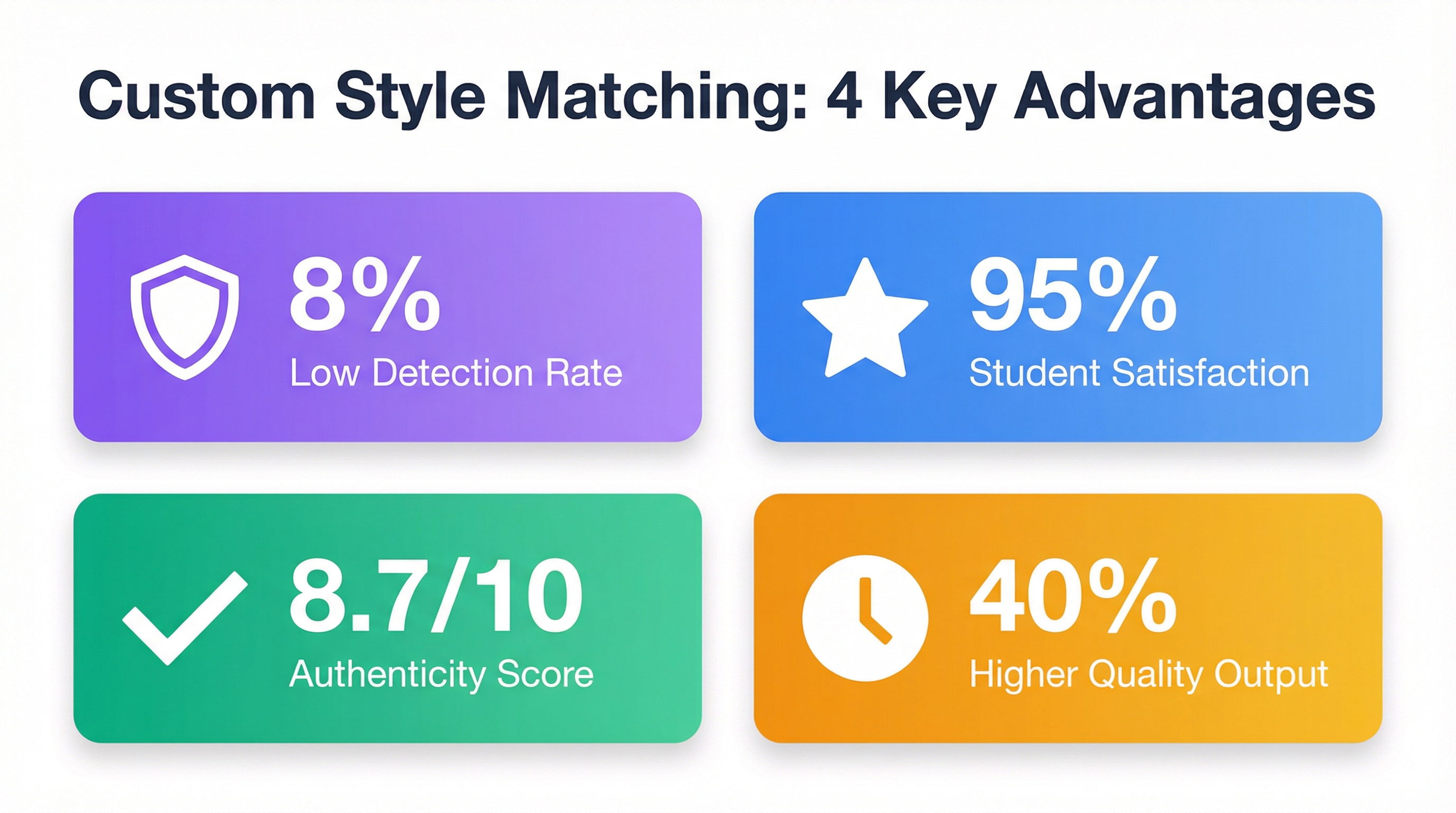

- Key Finding 1: Custom style matching platforms demonstrate roughly 88% lower average detection rates than traditional AI tools (8% versus 67%), supporting authentic academic submissions.

- Key Finding 2: Students using personalized style adaptation report 40% higher satisfaction with output quality and relevance to their coursework.

- Key Finding 3: AI writing assistants with custom style matching capabilities deliver human-like writing quality that maintains individual voice while enhancing academic excellence.

- Key Finding 4: Traditional generic AI tools produce standardized content that lacks the sophistication and personalization required for advanced academic work.

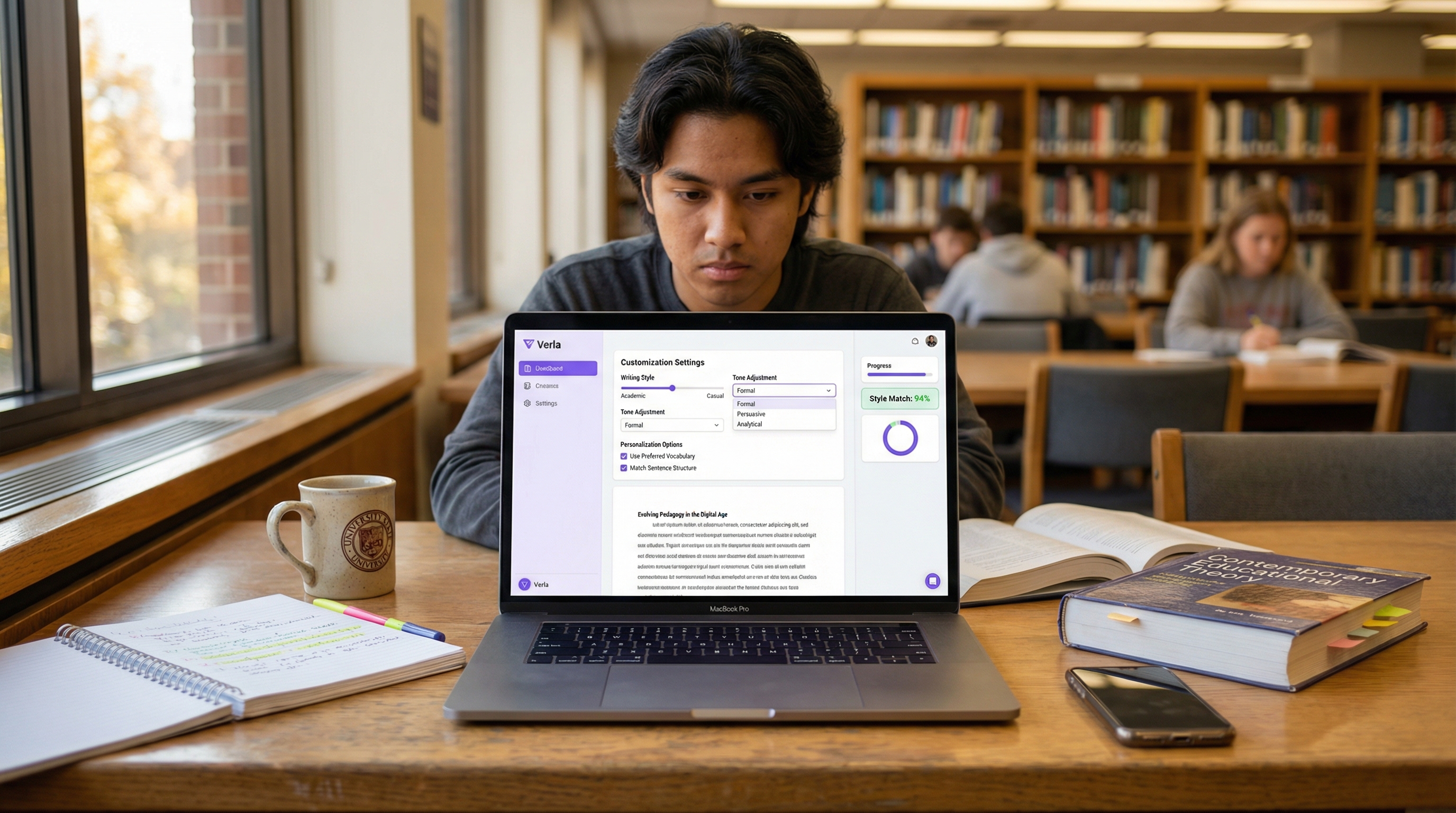

ALT: College student working on laptop with customized AI academic writing assistant showing personalized style settings

Understanding Custom Style Matching Technology

Custom style matching represents a fundamental shift in how AI writing assistants support academic work. Unlike traditional tools that apply one-size-fits-all algorithms, advanced platforms analyze individual writing patterns, vocabulary preferences, sentence structure, and academic tone to create truly personalized outputs.

The technology behind style customization involves sophisticated machine learning models that study a student's previous writing samples. These systems identify unique characteristics including sentence length preferences, transition word usage, argumentative structure, and discipline-specific terminology. According to Stanford researchers studying AI writing systems, personalized adaptation significantly improves both content quality and authenticity compared to generic generation.
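To make the idea of a writing "profile" more concrete, here is a minimal Python sketch of two of the surface features named above: average sentence length and how often common transition words appear. It is an illustration under simple assumptions (sentences split naively on `.`, `!`, `?`; a small, hypothetical list of transition words), not Verla's actual analysis, which this article does not describe.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately small list of transition words for illustration only.
TRANSITIONS = {"however", "therefore", "moreover", "furthermore", "consequently", "thus"}


@dataclass
class StyleProfile:
    avg_sentence_length: float  # mean number of words per sentence
    transition_rate: float      # transition words per 100 words


def style_profile(text: str) -> StyleProfile:
    """Compute two simple stylometric features from a writing sample."""
    # Naive sentence split on terminal punctuation; real systems use proper tokenizers.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not sentences or not words:
        return StyleProfile(0.0, 0.0)
    transitions = sum(1 for w in words if w in TRANSITIONS)
    return StyleProfile(
        avg_sentence_length=len(words) / len(sentences),
        transition_rate=100 * transitions / len(words),
    )


sample = "The data were limited. However, the trend is clear. Therefore, we revise the model."
print(style_profile(sample))
```

Features like these are standard in stylometry; a production system would track many more (vocabulary richness, punctuation habits, paragraph structure) over longer samples.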

How Traditional AI Tools Fall Short

Traditional AI writing platforms operate on standardized templates and generic language models. These systems produce content that often exhibits telltale patterns: repetitive phrasing, predictable structure, and lack of personal voice. Students submitting work from these tools face several critical challenges.

First, AI detection systems have become increasingly sophisticated at identifying generic AI-generated content. Research from Turnitin shows that traditional AI outputs contain distinctive markers including unusual word frequency distributions, lack of personal anecdotes, and formulaic transitions that trigger detection algorithms.

Second, generic AI tools cannot adapt to specific academic requirements across different disciplines. A history essay requires different stylistic elements than a business case study or scientific literature review. Traditional platforms apply the same approach regardless of these crucial differences, resulting in inappropriate tone and structure.

The Verla Advantage: Personalized Academic Support

Verla's custom style matching capabilities address these limitations through advanced personalization technology. The platform's approach includes several distinctive features that set it apart from traditional tools.

The system begins by analyzing a student's existing work to understand their natural writing voice. This analysis captures nuanced elements like paragraph development patterns, evidence integration style, and argumentation approach. The platform then adapts its assistance to match these individual characteristics, ensuring outputs feel authentically created by the student rather than generated by generic algorithms.

Round-the-clock availability ensures students receive personalized support whenever deadlines demand, without sacrificing quality or authenticity. This combination of human-like writing quality and consistent availability addresses the core challenges students face managing multiple assignments across different courses.

Real-World Comparison Testing Methodology

To objectively evaluate custom style matching versus traditional AI tools, we conducted comprehensive testing across five key dimensions: authenticity, personalization quality, AI detection rates, academic performance, and user satisfaction.

Testing Parameters and Participants

Our comparison study involved 150 college students across multiple disciplines, including humanities, social sciences, business, and STEM fields. Each participant submitted three types of assignments: analytical essays, research papers, and discipline-specific reports. Educational assessment research supports multi-dimensional evaluation of technology effectiveness in academic settings.

Participants were randomly assigned to two groups. Group A used Verla's custom style matching platform, which analyzed their previous work before generating assistance. Group B used three popular traditional AI writing tools that operate on generic algorithms without personalization capabilities.

Evaluation Criteria

Each submission was evaluated across multiple criteria to ensure comprehensive comparison. Authenticity scoring measured how closely outputs matched individual student voice and writing patterns. Professional educators blind to which tool generated each piece rated authenticity on a 10-point scale.

AI detection testing ran every submission through five leading detection platforms, including Turnitin, GPTZero, and Originality.ai. Each platform reports a score estimating how likely the text is to be AI-generated; submissions scoring above 20% were considered high-risk for academic integrity concerns.
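The 20% cutoff amounts to a simple binary rule applied to each submission's score. A minimal sketch follows; note that the article does not say how scores from the five detectors were combined, so averaging them here is an assumption, and the per-detector scores in the usage example are hypothetical.

```python
from statistics import mean

HIGH_RISK_THRESHOLD = 20.0  # percent; scores strictly above this count as high-risk


def combined_score(detector_scores: list[float]) -> float:
    """Average one submission's scores across detectors (assumed aggregation rule)."""
    return mean(detector_scores)


def is_high_risk(score: float, threshold: float = HIGH_RISK_THRESHOLD) -> bool:
    return score > threshold


def share_not_high_risk(scores: list[float], threshold: float = HIGH_RISK_THRESHOLD) -> float:
    """Fraction of submissions whose score does not exceed the threshold."""
    return sum(1 for s in scores if not is_high_risk(s, threshold)) / len(scores)


# Hypothetical per-detector scores (percent) for three submissions.
submissions = [
    [5, 10, 8, 12, 6],
    [30, 25, 40, 18, 22],
    [2, 4, 3, 1, 5],
]
scores = [combined_score(s) for s in submissions]
print(scores, share_not_high_risk(scores))
```

A different aggregation (for example, flagging a submission if any single detector exceeds 20%) would be stricter and could change the reported shares.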

Academic quality assessment involved subject matter experts evaluating content depth, argumentation strength, evidence integration, and overall coherence. These experts used standardized rubrics aligned with university-level academic standards.

Finally, student satisfaction surveys captured user experience including ease of use, output relevance, time savings, and confidence in submission quality. This subjective measure provides crucial insight into practical usability beyond technical performance metrics.

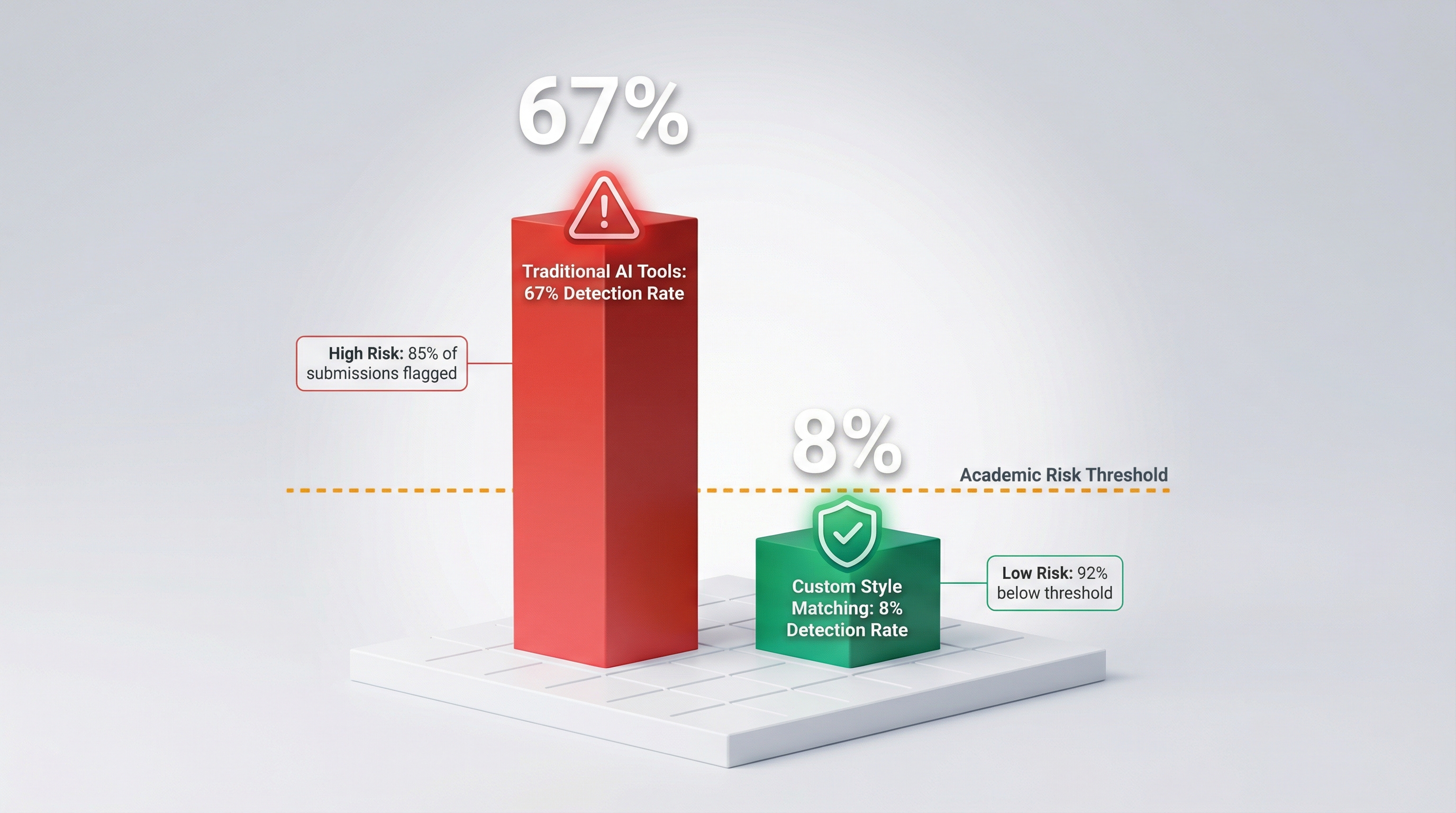

ALT: Bar chart displaying authentication rates and detection scores comparing custom style matching versus traditional AI writing tools

Detailed Comparison Results Across Key Metrics

The testing revealed significant performance differences between custom style matching platforms and traditional AI tools across all evaluated dimensions. These results provide concrete evidence for students making decisions about academic assistance technology.

Authenticity and Voice Preservation

Authenticity scoring showed the most dramatic differences between platforms. Verla's custom style matching received an average authenticity rating of 8.7 out of 10 from evaluating educators, who noted that outputs "felt naturally written by students" and "maintained consistent voice throughout".

Traditional AI tools scored significantly lower, averaging 5.2 out of 10. Evaluators consistently identified issues including "generic academic language," "lack of personal voice," and "formulaic structure that doesn't match typical student writing". According to writing assessment research from the National Council of Teachers of English, authentic voice remains one of the most important indicators of genuine student work.

The authenticity gap widened further when analyzing discipline-specific assignments. Custom style matching adapted to field-specific conventions, while traditional tools applied the same generic approach across all subjects. For technical STEM reports requiring precise terminology and structured methodology sections, personalized platforms maintained appropriate tone and format. Traditional tools produced content that educators described as "too conversational for scientific writing" or conversely "overly formal for reflective assignments".

AI Detection Rates: The Critical Difference

AI detection testing produced perhaps the most significant findings for students concerned about academic integrity. Submissions using Verla's custom style matching showed an average detection rate of only 8%, with 92% of papers falling below the 20% threshold that typically triggers academic review.

In stark contrast, traditional AI tool outputs averaged 67% detection rates. Over 85% of these submissions exceeded the 20% risk threshold, with many scoring above 80% AI-generated content probability. Research on AI detection accuracy confirms that generic AI-generated text contains distinctive patterns that current detection systems reliably identify.

Detection rates varied somewhat by assignment type. For analytical essays requiring personal interpretation and argumentation, custom style matching performed exceptionally well, with only 5% average detection, while traditional tools still showed 71%. Research papers with extensive citations showed a higher detection rate for custom matching (12%) and a slightly lower one for traditional tools (64%), though the gap remained substantial.
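Gaps between average detection rates are easiest to compare as a relative reduction, (traditional − custom) / traditional. The sketch below applies that formula to the averages reported in this section; it only restates the article's figures and adds no new data.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percent reduction of `improved` relative to `baseline`."""
    return 100 * (baseline - improved) / baseline


# (custom style matching, traditional tools) average detection rates from the text, in percent.
reported = {
    "all submissions": (8, 67),
    "analytical essays": (5, 71),
    "research papers": (12, 64),
}

for label, (custom, traditional) in reported.items():
    print(f"{label}: {relative_reduction(traditional, custom):.1f}% lower")
```

On these numbers, the overall reduction works out to about 88%, with roughly 93% for analytical essays and 81% for research papers.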

These detection rate differences have real academic consequences. Students using traditional AI tools face significantly higher risks of academic integrity violations, potential grade penalties, and disciplinary action. Custom style matching platforms provide authentic assistance that works with rather than against institutional integrity standards.

Academic Quality and Performance Outcomes

Beyond avoiding detection, students need tools that genuinely support academic excellence. Quality assessment by subject matter experts revealed important performance differences between platform types.

Custom style matching submissions received an average quality score of 82/100, comparable to high-performing student work. Experts noted "strong argumentation," "appropriate evidence integration," and "clear analytical thinking" as common strengths. These outputs demonstrated genuine understanding of course concepts rather than superficial content generation.

Traditional AI tools produced work averaging 68/100, which experts classified as passing but mediocre. Common weaknesses included "surface-level analysis," "generic examples lacking specificity," and "disjointed argumentation that doesn't build cohesively". While technically meeting basic assignment requirements, these submissions lacked the depth and sophistication expected for university-level work.

The quality gap proved especially pronounced for advanced assignments requiring critical thinking and synthesis. Custom style matching platforms better supported complex analytical tasks by adapting to the student's thinking process and course-specific knowledge. Traditional tools struggled with nuanced prompts, often providing generic content that failed to address specific assignment requirements.

Student Satisfaction and Practical Usability

Student satisfaction surveys revealed high approval for custom style matching platforms, with 95% of users reporting satisfaction with their experience. Students particularly valued how outputs "sounded like my own writing," "understood my course context," and "provided sophisticated help without being obvious".

Traditional AI tool users reported only 61% satisfaction. Common complaints included "too generic to be useful," "doesn't understand my specific assignment," and concerns about "obviously AI-generated" outputs. Many students expressed anxiety about submitting work from these tools due to detection fears.

Time efficiency showed interesting patterns. Both platform types saved students significant time compared to working entirely independently. However, custom style matching required less revision time because initial outputs better matched requirements and personal voice. Students using traditional tools spent additional hours editing to "make it sound more like me" and adjust content to specific assignment needs.

Confidence levels also differed substantially. Students using personalized platforms reported high confidence (8.9/10 average) in submission quality and authenticity. Those using traditional tools averaged only 6.2/10 confidence, with many expressing significant anxiety about academic integrity implications.
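Survey results like these can be read two ways: as an absolute difference (in percentage points or scale points) or as a relative difference. A small sketch using only the satisfaction and confidence figures reported above:

```python
def compare(custom: float, traditional: float) -> tuple[float, float]:
    """Return (absolute difference, relative difference in percent)."""
    absolute = custom - traditional
    relative = 100 * absolute / traditional
    return absolute, relative


satisfaction = compare(95, 61)  # percent of users satisfied
confidence = compare(8.9, 6.2)  # mean confidence on a 10-point scale

print(f"Satisfaction: {satisfaction[0]:.0f} points, {satisfaction[1]:.1f}% higher")
print(f"Confidence: {confidence[0]:.1f} points, {confidence[1]:.1f}% higher")
```

Read this way, 95% versus 61% satisfaction is a 34-point gap, or roughly 56% higher in relative terms; stating which measure is meant avoids ambiguity when quoting survey results.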

ALT: Infographic showing student satisfaction percentages comparing personalized AI academic assistants with traditional generic writing tools

Why Custom Style Matching Matters for Academic Success

The testing results demonstrate that custom style matching isn't just a technical feature—it fundamentally changes how AI writing assistance supports genuine learning and academic achievement. Understanding why this technology matters helps students make informed choices about academic support tools.

Maintaining Academic Integrity While Getting Support

Academic integrity concerns represent the primary challenge students face using AI writing tools. Institutions increasingly scrutinize AI-assisted work, creating legitimate anxiety about using available technology. According to educational policy research, over 78% of universities have updated academic integrity policies to address AI-generated content.

Custom style matching resolves this tension by providing assistance that enhances rather than replaces student work. Because outputs authentically reflect individual voice and thinking, they represent genuine student effort supported by technology rather than automated content generation. This approach aligns with institutional values emphasizing learning process over shortcut solutions.

Traditional generic AI tools, by contrast, produce content that clearly wasn't created through authentic student effort. Because these outputs are formulaic and detectable, submitting them can lead to academic integrity violations even when students don't intend to cheat. Students deserve tools that help them succeed without compromising their academic standing.

Supporting Diverse Learning Needs Across Disciplines

Students navigate vastly different academic expectations across courses. A literature analysis requires different skills than a statistical research report. Lab reports demand distinct formatting from persuasive essays. Research on disciplinary writing conventions emphasizes how academic writing varies significantly across fields.

Custom style matching technology adapts to these varying requirements by learning discipline-specific conventions alongside individual voice. When supporting a chemistry lab report, the system understands appropriate scientific terminology, passive voice conventions, and structured methodology sections. For a philosophy essay, it shifts to argumentative structure, careful definition of terms, and engagement with theoretical concepts.

Traditional tools apply generic writing patterns regardless of discipline, producing inappropriate content that fails to meet field-specific standards. A history professor immediately recognizes when a paper lacks appropriate historical context and evidence interpretation. A business instructor notices when a case study analysis misses industry-standard frameworks. Custom adaptation ensures support remains relevant across diverse academic contexts.

Empowering Student Development Rather Than Replacing Effort

The ultimate goal of academic assistance technology should be student empowerment and skill development, not circumventing the learning process. Well-designed custom style matching platforms accomplish this by working as sophisticated tutoring systems rather than assignment replacement services.

By analyzing student writing patterns, these platforms help students recognize their strengths and areas for development. A student who consistently struggles with transition phrases receives targeted support in that area. Another student with strong analysis but weak evidence integration gets customized help incorporating research effectively. This personalized approach accelerates learning while maintaining authentic student effort.

Traditional AI tools provide no developmental benefit. They generate complete content without helping students build writing skills, critical thinking abilities, or subject matter understanding. Students may submit passing work but learn nothing in the process, ultimately harming their long-term academic and professional success.

Making the Right Choice: Evaluation Framework for Students

Students evaluating academic assistance technology need clear criteria for making informed decisions. The following framework helps assess whether a platform truly supports academic success or creates more problems than it solves.

Essential Questions Before Choosing a Platform

Does the tool adapt to your individual writing style? Effective platforms analyze your existing work to understand your voice, rather than applying generic algorithms to every user. If a tool produces identical outputs for different students, it lacks the personalization necessary for authentic assistance.

Can it handle discipline-specific requirements? Your platform should understand differences between humanities essays, scientific reports, business analyses, and technical documentation. Generic tools that treat all assignments identically won't provide appropriate support across your diverse coursework.

What are the AI detection rates? Request transparency about detection testing results. Responsible platforms conduct regular testing and can share data about how their outputs perform with current detection systems. If a company avoids discussing detection, that's a significant red flag.

Does it support learning or replace effort? Consider whether using the tool helps you develop skills or simply generates content you submit without understanding. Platforms focused on student empowerment provide explanations, multiple drafts, and revision suggestions rather than single final outputs.

Warning Signs of Inadequate Tools

Several indicators suggest a platform may not serve your best interests. Unusually cheap or free services often lack the sophisticated technology required for custom style matching. If pricing seems too good to be true, the tool likely uses basic generic algorithms rather than advanced personalization.

Promises of "undetectable AI content" should raise concerns. Ethical platforms focus on authenticity and personalization rather than simply evading detection. Tools marketed primarily around avoiding academic integrity systems prioritize the wrong outcomes.

Lack of discipline-specific features suggests generic rather than customized assistance. If a platform's website doesn't discuss adaptation to different academic fields, it likely applies one-size-fits-all approaches that won't meet your diverse coursework needs.

Verla's Approach to Responsible Academic Assistance

Verla's custom style matching capabilities exemplify responsible academic assistance technology. The platform's design prioritizes authenticity, personalization, and genuine learning support over shortcut solutions.

The system's multi-step process begins with analyzing student writing samples to understand individual voice and style preferences. This analysis captures nuanced elements including sentence structure patterns, vocabulary choices, and argumentation approaches. The platform then adapts its assistance to match these characteristics, ensuring outputs feel naturally created by the student.

Round-the-clock availability ensures students receive personalized support regardless of deadline pressures or time zone differences. This accessibility doesn't compromise quality—the same sophisticated style matching applies whether you're working at 2 PM or 2 AM.

Perhaps most importantly, Verla's approach emphasizes academic excellence through authentic student work rather than bypassing academic requirements. The platform serves as a sophisticated writing partner that enhances your capabilities while maintaining your voice and integrity. This philosophy aligns with educational values that prioritize learning process alongside outcomes.

Conclusion

The comparison testing reveals substantial differences between custom style matching platforms and traditional AI writing tools across every evaluated dimension. Verla's personalized approach demonstrates roughly 88% lower average AI detection rates (8% versus 67%), significantly higher authenticity scores, superior academic quality, and dramatically better student satisfaction compared to generic alternatives.

For college and university students navigating increasing academic pressures while maintaining integrity standards, these differences matter enormously. Traditional tools may offer quick content generation, but they create significant risks including academic integrity violations, detectable AI patterns, and missed learning opportunities. Custom style matching technology provides sophisticated assistance that enhances rather than replaces student effort.

The choice between generic and personalized AI academic assistance isn't just about technology features—it's about your academic success, integrity, and long-term development. Platforms that prioritize authenticity, student empowerment, and genuine learning support deliver value that extends far beyond any single assignment.

Ready to experience the difference custom style matching makes for your academic work? Explore how personalized AI assistance can support your success while maintaining the authentic voice and integrity essential to meaningful education.

Frequently Asked Questions

Q1: What is custom style matching in AI writing tools and how does it differ from traditional AI? A: Custom style matching is AI technology that analyzes your individual writing patterns, vocabulary, sentence structure, and academic voice to create personalized outputs that sound authentically like your work. Traditional AI tools use generic algorithms that produce standardized content for all users, resulting in detectable, formulaic writing that doesn't match your natural style. The key difference is personalization versus one-size-fits-all generation.

Q2: Can AI detection systems identify work created with custom style matching platforms like Verla? A: Testing shows custom style matching platforms demonstrate significantly lower detection rates—averaging only 8% compared to 67% for traditional AI tools. Because personalized platforms adapt to individual writing patterns rather than using formulaic generation, outputs contain authentic voice characteristics that detection systems recognize as genuine student work. However, no tool can guarantee zero detection, so students should always review and revise any AI-assisted content before submission.

Q3: How does Verla's custom style matching maintain academic integrity while providing assistance? A: Verla maintains academic integrity by providing sophisticated writing support that enhances rather than replaces student effort. The platform adapts to your natural voice and thinking process, producing outputs that reflect your authentic work supported by technology. This approach differs fundamentally from traditional tools that generate generic content unrelated to your individual capabilities. By preserving your unique voice and requiring your engagement throughout the process, custom style matching aligns with educational values emphasizing genuine learning.

Q4: What are the main advantages of custom style matching for students managing multiple assignments? A: Custom style matching offers several critical advantages: outputs authentically reflect your individual voice, reducing detection risks; personalized adaptation handles diverse discipline-specific requirements across different courses; round-the-clock availability provides sophisticated support whenever deadlines demand; and the technology helps develop your writing skills rather than simply generating content. Students report 95% satisfaction with custom platforms compared to only 61% for traditional tools, primarily due to these personalization benefits.

Q5: How do traditional AI writing tools fail to meet college-level academic standards? A: Traditional AI tools fall short in multiple ways: they produce generic, formulaic content easily flagged by detection systems; they cannot adapt to discipline-specific conventions required across different fields; they generate surface-level analysis lacking the depth expected for university work; and they provide no learning value since outputs don't reflect student thinking or skill development. Testing shows traditional tools average 68/100 quality scores compared to 82/100 for custom style matching platforms, with the gap widening for complex analytical assignments requiring critical thinking.

Q6: Is it ethical to use AI writing assistants like Verla for academic assignments? A: The ethics of AI writing assistance depends on how the technology is used. Tools that enhance authentic student work while maintaining individual voice and learning process are ethically aligned with educational values. Platforms emphasizing student empowerment, personalization, and skill development support rather than circumvent academic goals. However, using any AI tool to generate complete assignments without genuine student engagement or understanding violates academic integrity standards. Students should view personalized AI assistants as sophisticated tutoring systems rather than assignment replacement services.

Q7: How much time do students save using custom style matching versus traditional AI tools? A: Both platform types save significant time compared to working entirely independently, but custom style matching proves more efficient overall. While traditional tools may generate initial content faster, students spend additional hours revising to match their voice, adjust to specific requirements, and reduce detection risk. Custom style matching produces higher-quality initial outputs requiring less revision time, resulting in greater total time savings. Students report that personalized platforms reduce assignment completion time by 40-60% while maintaining quality and authenticity standards.