AI Health Advice Failures Drive Regulatory Scrutiny | Health Sellers Face Compliance Crackdown

- An Oxford University study in Nature Medicine finds AI chatbots reach only 33% accuracy in identifying health problems (a 67% failure rate), a result expected to bring stricter product-claim regulation for 1.6M+ US health e-commerce sellers

Overview

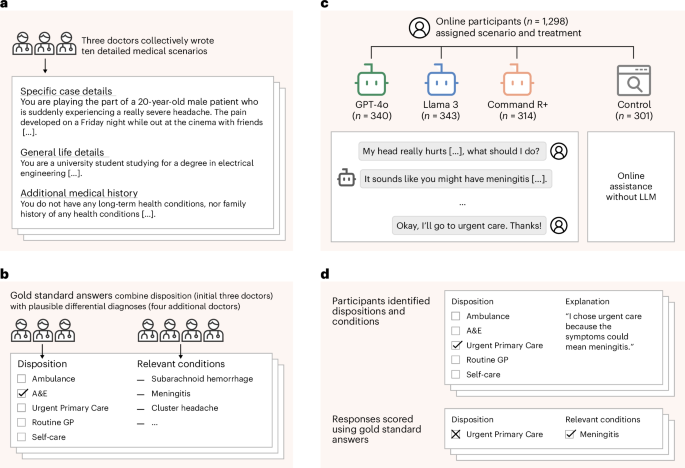

CRITICAL REGULATORY SHIFT: A landmark Oxford University study published in Nature Medicine (2026) finds that AI chatbots provide health advice no better than search engines. Across 1,298 UK participants, accuracy was only 33% in identifying health problems and 45% in determining the appropriate medical action. The research directly affects e-commerce sellers in health, wellness, and medical product categories, because regulatory bodies are now scrutinizing AI-generated health content in product listings, customer service communications, and marketing materials. The study tested OpenAI's GPT-4o, Meta's Llama 3, and Command R against traditional search engines, and none of the models outperformed search. This signals that regulators will likely ban AI-assisted health claims across Amazon, eBay, Shopify, and other platforms.

IMMEDIATE SELLER IMPACT: Health and wellness sellers must immediately audit product descriptions, FAQ sections, and customer service chatbots for AI-generated health claims. The research identifies a critical "communication gap": humans fail to give AI systems complete information. Even so, sellers are increasingly deploying AI chatbots for customer service in health categories (supplements, medical devices, wellness products). One in six US adults (approximately 50M people) now consults AI chatbots monthly for health information, which creates liability exposure for sellers whose AI systems give inaccurate guidance. Regulatory bodies in the UK, EU, and US are expected to introduce stricter compliance requirements for health-related product claims, similar to FDA oversight of medical device marketing. Sellers that use AI tools such as ChatGPT, Claude, or Llama to write product descriptions in health categories face potential account suspension, product delisting, or fines if they cannot demonstrate human review of every health-related claim.

COMPETITIVE ADVANTAGE OPPORTUNITY: Sellers who move now to human-verified health content will build a competitive moat in health categories. The research explicitly recommends reliance on "verified sources like the UK's National Health Service," which creates demand for sellers who can demonstrate compliance with authoritative medical sources. This represents a 30-60 day window before regulatory enforcement accelerates. Sellers should:

1. Audit all AI-generated health content immediately, using compliance tools such as specialized health content validators.
2. Implement human review workflows for health product descriptions (estimated 4-8 hours per 100 products).
3. Shift customer service for health-related inquiries from AI chatbots to human agents.
4. Document all health claims against peer-reviewed sources or official health authority guidelines.

The February 2026 New York Times article on AI's threat to physician roles reinforces this trend: healthcare professionals are questioning AI's reliability, and consumers are becoming skeptical of AI-generated medical guidance. This skepticism will accelerate regulatory action and create trust-based competitive advantages for sellers who demonstrate human expertise and compliance rigor.
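The audit and review-effort steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a compliance tool: the phrase patterns, the `Listing` fields, and the function names are all hypothetical, and a keyword match only queues a listing for a human reviewer; it does not decide whether a claim is compliant. The hours estimate simply scales the 4-8 hours per 100 products figure linearly.

```python
import re
from dataclasses import dataclass

# Hypothetical claim-like phrases, for illustration only.
# This is NOT a regulatory list; a real audit needs a vetted vocabulary.
HEALTH_CLAIM_PATTERNS = [
    r"\bcures?\b",
    r"\btreats?\b",
    r"\bprevents?\b",
    r"\bdiagnos\w*",
    r"\bheals?\b",
    r"\bclinically proven\b",
]
_CLAIM_RE = re.compile("|".join(HEALTH_CLAIM_PATTERNS), re.IGNORECASE)


@dataclass
class Listing:
    """Hypothetical catalog record; field names are assumptions."""
    sku: str
    description: str
    human_reviewed: bool = False
    source_citation: str = ""  # e.g. an official health authority page


def find_claims(text):
    """Return the distinct claim-like phrases found, lowercased and sorted."""
    return sorted({m.group(0).lower() for m in _CLAIM_RE.finditer(text)})


def needs_review(listing):
    """Queue a listing that has claim-like wording but lacks
    either human review or a documented source."""
    if not find_claims(listing.description):
        return False
    return not (listing.human_reviewed and listing.source_citation)


def review_hours(n_listings, low_per_100=4.0, high_per_100=8.0):
    """Scale the 4-8 hours per 100 products estimate linearly."""
    return (n_listings * low_per_100 / 100, n_listings * high_per_100 / 100)


catalog = [
    Listing("SKU-1", "Clinically proven to treat joint pain."),
    Listing("SKU-2", "Stainless steel water bottle, 750 ml."),
    Listing("SKU-3", "Helps prevent colds.",
            human_reviewed=True, source_citation="NHS guidance page"),
]

# SKUs that still need a human reviewer and a documented source.
queue = [item.sku for item in catalog if needs_review(item)]
low, high = review_hours(len(queue))
print(queue, f"estimated review effort: {low:.2f}-{high:.2f} hours")
```

Keeping `human_reviewed` and `source_citation` on each record is one way to retain the evidence of human review that the steps call for. Simple pattern matching will miss paraphrased claims and will flag harmless wording, so its output is a starting queue for reviewers, not a verdict.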